AI Governance in ITSM: Policies and Frameworks for Responsible Automation

Understanding AI Governance: A Necessity in Modern ITSM

As artificial intelligence transforms Information Technology Service Management (ITSM), it brings both immense opportunity and growing complexity. Businesses seek faster resolutions, predictive analytics, and optimized operations, but these advances bring new challenges around transparency, ethics, and compliance. Clear AI governance, well-defined ITSM policies, robust AI compliance frameworks, and a commitment to enterprise AI ethics are no longer optional. They are foundational for organizations that want to harness AI's power while upholding trust, accountability, and regulatory alignment.

This blog explores the evolving landscape of AI governance in ITSM environments. We’ll analyze frameworks, ethical considerations, and actionable steps to ensure your AI-driven automation is responsible and future-proof.

The Rising Importance of AI Governance in ITSM

AI is embedded across today’s service desks, from intelligent ticket triage and chatbots to predictive escalation and process orchestration. However, unlike traditional automation, AI models can make dynamic decisions, learn from sensitive data, and potentially propagate biases. Without strong AI governance, ITSM tools risk producing unreliable, opaque, or even unethical outcomes.

Key drivers for establishing AI governance in ITSM include:

- Regulatory pressure: Regulations such as the EU AI Act, along with industry standards, require organizations to manage AI risk proactively.

- Reputational risk: Faulty AI can erode customer trust and damage brand reputation, especially if errors or bias go unchecked.

- Operational control: Strong governance ensures AI aligns with business goals and ITIL principles, minimizing service disruptions.

- Scalability: As organizations adopt more AI-driven automations, consistent policies and frameworks support safe, repeatable growth.

Responsible AI automation is not just about compliance — it's about unlocking sustainable value from emerging technologies.

Core Principles of AI Governance for ITSM

Effective AI governance is underpinned by a set of core principles that inform every decision, from model selection to ongoing monitoring. Consider the following principles as a roadmap for your ITSM environment:

- Transparency: Ensure that automated decisions are explainable, and users understand AI’s role in service delivery.

- Accountability: Assign clear responsibility for AI models, their outputs, and their impacts on business operations.

- Fairness and Bias Mitigation: Regularly test AI for bias, especially if it touches user experience, HR, or customer-facing processes.

- Robustness and Security: AI systems must be resilient against errors, data drift, adversarial manipulation, and cyber threats.

- Compliance and Ethics: Adhere to local regulations, data privacy laws, and ethical guidelines governing artificial intelligence.

Establishing these principles aligns your AI initiatives with both ITSM best practices and enterprise AI ethics mandates.
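The robustness principle calls out data drift, which is easier to govern once it is measured. As a minimal sketch, the Python below computes the Population Stability Index (PSI), a common drift metric, over the category mix of incoming tickets. The function name `psi`, the `eps` smoothing floor, and the choice of ticket categories as the monitored feature are illustrative assumptions, not features of any specific ITSM product.

```python
import math
from collections import Counter


def psi(baseline: list[str], current: list[str], eps: float = 1e-4) -> float:
    """Population Stability Index between two samples of a categorical feature.

    baseline: values observed when the model was validated (e.g. ticket categories).
    current:  values observed in the recent monitoring window.
    eps:      assumed floor for category shares, so a category missing from one
              sample does not cause division by zero or log(0).
    """
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    base_counts, cur_counts = Counter(baseline), Counter(current)
    total = 0.0
    for category in set(base_counts) | set(cur_counts):
        expected = max(base_counts[category] / len(baseline), eps)
        observed = max(cur_counts[category] / len(current), eps)
        total += (observed - expected) * math.log(observed / expected)
    return total


if __name__ == "__main__":
    # Hypothetical ticket-category samples.
    last_quarter = ["network"] * 50 + ["access"] * 50
    this_week = ["network"] * 80 + ["access"] * 20
    print(f"PSI = {psi(last_quarter, this_week):.3f}")  # PSI = 0.416
```

A frequently cited rule of thumb reads PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as significant. Treat those cut-offs as starting points to calibrate against your own data rather than fixed standards.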

Frameworks for AI Governance in ITSM

Organizations benefit from adopting proven AI governance frameworks that structure how automation is designed, implemented, and maintained within ITSM tools. While each business’s approach will be unique, leading frameworks typically feature these elements:

- Draft a comprehensive AI policy addressing permitted use cases, ethical standards, compliance checkpoints, escalation protocols, and user engagement expectations.

- Evaluate AI projects by expected risk and impact — for example, by their influence on critical ITSM workflows, employee privacy, or customer outcomes. Classify AI systems accordingly and devise mitigation strategies for high-risk tools.

- Apply a lifecycle approach to AI assets: from ideation and design to training, deployment, monitoring, and decommissioning. This supports continuous oversight rather than one-time reviews.

- Implement dashboards, documentation, and audit trails. Make sure administrators, auditors, and users understand how and why AI acts in automated processes.

- Engage cross-functional teams (IT, legal, HR, compliance, and business leadership) in every stage of AI deployment. Regular communication fosters shared understanding and trust.
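The lifecycle approach is easier to enforce when stage transitions are explicit. The sketch below models an AI asset's lifecycle as a small state machine in which each permitted transition requires named sign-offs. The stages follow the list above; the `REQUIRED_SIGNOFFS` table, its role names, and the `advance` function are hypothetical placeholders to adapt to your own review process.

```python
from enum import Enum


class Stage(Enum):
    IDEATION = "ideation"
    DESIGN = "design"
    TRAINING = "training"
    DEPLOYMENT = "deployment"
    MONITORING = "monitoring"
    DECOMMISSIONED = "decommissioned"


# Hypothetical gate table: which sign-offs each permitted transition requires.
REQUIRED_SIGNOFFS: dict[tuple[Stage, Stage], set[str]] = {
    (Stage.IDEATION, Stage.DESIGN): {"business_owner"},
    (Stage.DESIGN, Stage.TRAINING): {"privacy", "legal"},
    (Stage.TRAINING, Stage.DEPLOYMENT): {"bias_review", "security", "compliance"},
    (Stage.DEPLOYMENT, Stage.MONITORING): {"operations"},
    (Stage.MONITORING, Stage.TRAINING): {"model_owner"},  # retraining re-enters the gates
    (Stage.MONITORING, Stage.DECOMMISSIONED): {"business_owner"},
}


def advance(current: Stage, target: Stage, signoffs: set[str]) -> Stage:
    """Return the new stage if the transition is permitted and fully signed off."""
    required = REQUIRED_SIGNOFFS.get((current, target))
    if required is None:
        raise ValueError(f"transition {current.value} -> {target.value} is not permitted")
    missing = required - signoffs
    if missing:
        raise PermissionError(f"missing sign-offs: {sorted(missing)}")
    return target


if __name__ == "__main__":
    stage = advance(Stage.DESIGN, Stage.TRAINING, {"privacy", "legal"})
    print(stage.value)  # training
```

Because retraining loops back through `TRAINING`, a retrained model has to clear the same pre-deployment review as the original, which is the practical difference between lifecycle oversight and a one-time approval.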

Examples of reference frameworks include NIST’s AI Risk Management Framework, ISO/IEC 23894:2023, and the OECD AI Principles. These can be tailored to meet the unique analytics, compliance, and ethical needs of ITSM platforms.

Policies for Responsible AI Automation in ITSM

Beyond broad frameworks, specific ITSM policies are vital for operationalizing responsible AI automation, turning commitments such as permitted use cases, compliance checkpoints, and escalation protocols into concrete rules for day-to-day service management.

Codifying these policies in your ITSM handbook ensures everyone — from AI development teams to front-line support — understands best practices and escalation paths.
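Policies are followed more consistently when the most important rules, such as escalation paths, are also enforced inside the automation itself. The following sketch routes an AI triage decision either to automatic handling or to human review. The `TriageDecision` fields, the category names, and the 0.80 confidence floor are hypothetical values chosen for illustration, and the sketch assumes the model exposes a usable confidence score, which not every ITSM tool does.

```python
from dataclasses import dataclass

# Hypothetical policy values; replace with the thresholds in your own ITSM handbook.
CONFIDENCE_FLOOR = 0.80
HIGH_IMPACT_CATEGORIES = {"security_incident", "hr_case", "data_privacy"}


@dataclass
class TriageDecision:
    ticket_id: str
    category: str
    priority: int       # 1 = most urgent
    confidence: float   # model's confidence in its classification, 0.0 to 1.0


def route(decision: TriageDecision) -> str:
    """Return "auto" if the AI decision may be applied directly, else "human_review"."""
    if decision.category in HIGH_IMPACT_CATEGORIES:
        return "human_review"  # high-impact areas always get a human check
    if decision.priority == 1:
        return "human_review"  # confirm the most urgent tickets before acting
    if decision.confidence < CONFIDENCE_FLOOR:
        return "human_review"  # the model is unsure, so escalate rather than guess
    return "auto"


if __name__ == "__main__":
    print(route(TriageDecision("INC001", "password_reset", 3, 0.95)))  # auto
    print(route(TriageDecision("INC002", "hr_case", 3, 0.99)))         # human_review
```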

Ethical Considerations for Enterprise AI in ITSM

Ethics is at the center of AI governance for ITSM. As automation becomes more sophisticated, business leaders must explicitly address the potential for harm, exclusion, or systemic bias. Consider these key ethical considerations for enterprise AI:

- Bias and Discrimination: Audit training data and output to detect and address gender, racial, or other biases that could harm users or organizations.

- Transparency with Impacted Parties: Be forthright about how AI influences user experiences — such as how tickets are prioritized or how incident root causes are identified.

- Human Oversight: Ensure AI decisions, especially those with substantial impact, are subject to human review and override.

- Equity in Service: Avoid designs that systematically disadvantage particular groups (e.g., by offering slower service to non-preferred languages or regions).

- Privacy by Design: Limit AI access to only the data absolutely necessary for a given automation, and adopt privacy-preserving techniques wherever possible.
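Bias and equity checks can start with simple measurements. As a minimal sketch, the function below compares median ticket resolution time across groups (for example, the language a ticket was written in) and flags any group whose median exceeds the fastest group's by more than a chosen ratio. The `equity_report` name, the 1.25 threshold, and the grouping key are assumptions; a flagged group is a prompt to investigate, not proof of bias.

```python
from collections import defaultdict
from collections.abc import Iterable
from statistics import median


def equity_report(
    tickets: Iterable[tuple[str, float]], max_ratio: float = 1.25
) -> dict[str, float]:
    """Flag groups whose median resolution time is disproportionately slow.

    tickets:   (group, resolution_hours) pairs, e.g. grouped by ticket language.
    max_ratio: assumed tolerance relative to the fastest group's median.
    Returns {group: ratio_to_fastest} for every group above max_ratio.
    """
    by_group: dict[str, list[float]] = defaultdict(list)
    for group, hours in tickets:
        by_group[group].append(hours)
    if not by_group:
        return {}
    medians = {group: median(hours) for group, hours in by_group.items()}
    fastest = min(medians.values())
    if fastest <= 0:
        raise ValueError("resolution times must be positive")
    return {
        group: m / fastest for group, m in medians.items() if m / fastest > max_ratio
    }


if __name__ == "__main__":
    # Hypothetical sample data.
    sample = [("en", 4), ("en", 6), ("de", 5), ("de", 5), ("pt", 10), ("pt", 12)]
    print(equity_report(sample))  # {'pt': 2.2}
```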

By embedding these values into ITSM policies and compliance frameworks, businesses uphold enterprise AI ethics and foster stronger, trust-based relationships with stakeholders.

Regulatory Compliance: Staying Ahead of Evolving Rules

As lawmakers increasingly focus on AI’s role in IT and business processes, regulatory compliance has become a cornerstone of responsible AI in ITSM. Business leaders should track both global and local legislation impacting their operations, such as:

- EU AI Act: Imposes varying requirements based on risk, including mandatory risk assessments, transparency obligations, and human oversight for high-risk AI.

- GDPR and Data Privacy Laws: Dictate how data can be collected, stored, and processed — with special attention to the rights of individuals in automated decisions.

- Industry-Specific Standards: Healthcare, finance, and public sectors may face additional controls around sensitive data, fairness, or auditability.

- SOC 2, ISO/IEC 27001, and NIST Standards: Cybersecurity best practices extend to AI automation and must be integrated into ITSM policy frameworks.

Proactive organizations don’t just react to new rules — they anticipate regulatory shifts by regularly updating policies, documentation, and supplier requirements.

Practical Steps for Business Leaders: Building a Responsible AI Program

Moving from theory to action, business leaders can champion responsible AI adoption in ITSM with these steps:

- Establish Clear Ownership: Designate senior leaders (CIO, CISO, or Chief Data Officer) accountable for AI governance in ITSM. Create cross-functional committees for ongoing oversight.

- Invest in Training: Educate ITSM teams, developers, and end-users about responsible AI practices, potential risks, and compliance obligations.

- Inventory Your AI Systems: Catalog every AI-driven automation, from chatbots to intelligent routing. Document each system’s data sources, model logic, and impact areas.

- Assess and Prioritize Risk: Use risk matrices to focus governance on the most critical and high-impact AI workflows.

- Implement Continuous Monitoring: Leverage tools that provide real-time auditing, drift detection, and explainability for automated systems.

- Foster a Culture of Transparency: Encourage open communication about AI failures and lessons learned. Celebrate teams that surface issues before they escalate.

- Periodically Review and Update Policies: Technology and regulations will continue to evolve. Conduct annual reviews of your AI governance framework and make iterative improvements.
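The inventory and risk-prioritization steps can share one data structure. The sketch below records each AI system with its data sources and impact areas, scores it on a 5x5 likelihood-by-impact risk matrix, and sorts the inventory so the riskiest systems get attention first. The `AISystem` fields, the scoring scale, the tier cut-offs, and the example systems are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class AISystem:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain) that the system misbehaves
    impact: int      # 1 (negligible) to 5 (severe) if it does
    data_sources: list[str] = field(default_factory=list)
    impact_areas: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        for score in (self.likelihood, self.impact):
            if not 1 <= score <= 5:
                raise ValueError(f"{self.name}: scores must be between 1 and 5")

    @property
    def risk_score(self) -> int:
        return self.likelihood * self.impact

    @property
    def tier(self) -> str:
        # Assumed cut-offs on the 5x5 matrix; align them with your own risk appetite.
        if self.risk_score >= 15:
            return "high"
        if self.risk_score >= 8:
            return "medium"
        return "low"


def prioritize(inventory: list[AISystem]) -> list[AISystem]:
    """Order the inventory so the highest-risk systems get governance attention first."""
    return sorted(inventory, key=lambda s: s.risk_score, reverse=True)


if __name__ == "__main__":
    # Hypothetical inventory entries.
    inventory = [
        AISystem("service-desk chatbot", 3, 3, ["knowledge base"], ["employee experience"]),
        AISystem("intelligent routing", 2, 5, ["ticket text"], ["incident response"]),
        AISystem("HR case summarizer", 4, 4, ["HR cases"], ["employee privacy"]),
    ]
    for system in prioritize(inventory):
        print(system.name, system.risk_score, system.tier)
```

Multiplying likelihood by impact is the simplest matrix scoring; some organizations prefer a lookup table so that a severe-impact, rare event is never ranked below a frequent, trivial one.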

These steps ground your responsible AI automation strategy in real-world impact and long-term success.

Case Study: Implementing Responsible AI Automation in a Global ITSM Platform

Consider a multinational enterprise that introduces an AI-powered chatbot and automated ticket triage across its global ITSM system. Here's how AI governance and compliance frameworks support responsible automation:

- Pre-Deployment Validation: The AI team tests the models with diverse ticket scenarios to spot and address potential bias and inaccuracies, especially for non-English speakers.

- Transparent Communication: Employees are notified when they interact with AI and offered options for human support where needed.

- Ongoing Monitoring: AI performance is tracked using KPIs like response accuracy, user satisfaction, and error rates; deviations prompt investigation and retraining.

- Periodic Audits: Internal reviews and third-party audits ensure that models meet enterprise AI ethics standards and comply with evolving privacy laws.

- Continuous Improvement: Feedback loops with users and stakeholders drive regular enhancements to policies, data quality, and model robustness.
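The KPI tracking in this scenario can be expressed as a baseline comparison. In the sketch below, each KPI has a baseline and an allowed degradation, and the check returns the KPIs that have worsened beyond tolerance so they can be routed for investigation. The `KPI` class, the `degraded_kpis` function, and all baseline and tolerance values are invented for illustration, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class KPI:
    name: str
    baseline: float
    tolerance: float              # allowed degradation before investigation (assumed)
    higher_is_better: bool = True


def degraded_kpis(kpis: list[KPI], current: dict[str, float]) -> list[str]:
    """Return the names of KPIs that have worsened by more than their tolerance."""
    flagged = []
    for kpi in kpis:
        change = current[kpi.name] - kpi.baseline
        # Express the change so that a positive number always means "got worse".
        degradation = -change if kpi.higher_is_better else change
        if degradation > kpi.tolerance:
            flagged.append(kpi.name)
    return flagged


if __name__ == "__main__":
    # Illustrative baselines, not benchmarks.
    kpis = [
        KPI("response_accuracy", baseline=0.92, tolerance=0.03),
        KPI("user_satisfaction", baseline=4.3, tolerance=0.2),
        KPI("error_rate", baseline=0.04, tolerance=0.01, higher_is_better=False),
    ]
    this_week = {"response_accuracy": 0.87, "user_satisfaction": 4.25, "error_rate": 0.045}
    print(degraded_kpis(kpis, this_week))  # ['response_accuracy']
```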

This approach can reduce incident response times and operational costs while building trust and confidence with employees and regulators.

Challenges and Future Trends in AI Governance for ITSM

While progress is rapid, organizations face ongoing hurdles in balancing innovation with governance:

- Keeping Up with Regulations: Patchwork global laws and data sovereignty rules require agility and dedicated legal support.

- Ensuring Explainability: As AI models grow more complex (deep learning, large language models), explaining their decisions becomes more difficult but no less necessary.

- Scaling Policies Across Geographies: Multinational ITSM platforms must adapt governance to local cultures, languages, and norms.

- Maturing Best Practices: Community standards for AI in ITSM are still emerging, requiring organizations to iterate and share lessons learned.

- Automating Governance: New AI tools can themselves help oversee, document, and audit automated workflows — closing the loop on continuous improvement.
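Automated audit trails are one place where tooling can take on governance work directly. The sketch below is a hash-chained, append-only audit log: each entry stores the SHA-256 hash of the previous entry, so editing a past record breaks verification. The `AuditLog` class and its field names are hypothetical. This makes tampering evident rather than impossible: someone able to rewrite the entire log, or truncate its tail, could go undetected, so in practice the latest hash would also be stored somewhere the log writer cannot modify.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


def _digest(entry: dict) -> str:
    # sort_keys gives a canonical JSON form, so identical content always hashes identically.
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which each entry's hash also covers the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, system: str, action: str, detail: dict) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "system": system,
            "action": action,
            "detail": detail,  # must be JSON-serializable
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry["hash"] = _digest(entry)  # computed before the "hash" key exists
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; an edited or reordered entry, or one deleted mid-log, fails."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            content = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(content):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.record("triage-model", "classified", {"ticket": "INC001", "category": "network"})
    log.record("triage-model", "escalated", {"ticket": "INC001", "to": "human_review"})
    print(log.verify())  # True
    log.entries[0]["detail"]["category"] = "hardware"  # simulate tampering
    print(log.verify())  # False
```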

Forward-thinking leaders should treat AI governance as a dynamic function that evolves alongside the capabilities and complexity of ITSM platforms.

Bridging AI Governance with ServiceNow’s Enterprise Capabilities

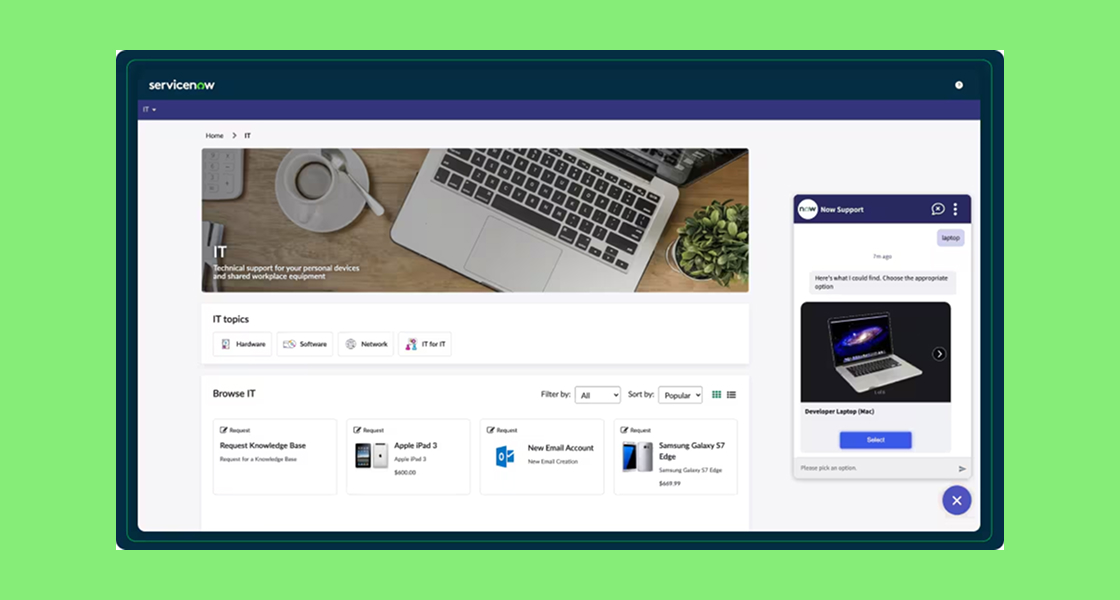

AI governance in ITSM is not just a theoretical framework — it requires platforms capable of operationalizing governance principles across complex, global enterprises. This is where ServiceNow stands out.

As the leading platform for digital workflows, ServiceNow already helps organizations unify ITSM, CSM, HR, security, and operations under a single architecture. With AI and automation embedded natively, ServiceNow enables faster resolutions, predictive insights, and hyperautomation while ensuring scalability and resilience. Its unified data model and enterprise-grade compliance certifications (ISO, SOC, FedRAMP, among others) provide a trusted foundation for regulated industries like banking, healthcare, and government.

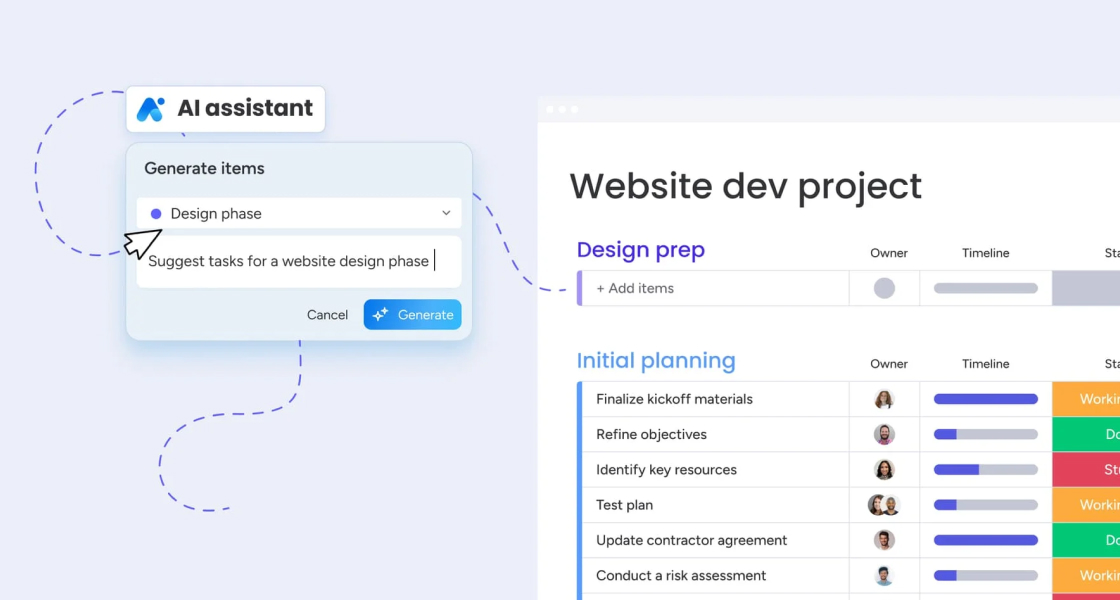

To extend these capabilities, ServiceNow AI Control Tower acts as the governance and orchestration layer for enterprise AI. It allows organizations to:

- Centralize oversight: Manage both native ServiceNow AI models (e.g., Now Assist for ITSM, CSM, and HR) and third-party AI solutions within a single control plane.

- Embed compliance: Apply ethical and regulatory guardrails into workflows, ensuring that automations meet standards like GDPR, the EU AI Act, and sector-specific regulations.

- Monitor in real time: Track bias, drift, explainability, and performance of AI models to maintain accountability and reliability across business functions.

- Scale responsibly: Enable consistent adoption of AI across the enterprise without sacrificing transparency, governance, or ITIL alignment.

By combining governance frameworks with ServiceNow’s AI Control Tower, enterprises can transform AI governance from a policy document into a living practice — delivering measurable trust, efficiency, and compliance at scale.

Conclusion: Building Trust Through Responsible AI Automation in ITSM

The integration of AI into ITSM is reshaping enterprise service delivery and user expectations. However, this transformation comes with critical responsibilities. AI governance, robust ITSM policies, and comprehensive AI compliance frameworks are central to ensuring responsible AI automation. Enterprise AI ethics must be at the heart of every automation initiative — guiding organizations toward transparent, fair, and compliant outcomes.

By proactively establishing governance frameworks, defining clear policies, addressing ethical considerations, and staying ahead of regulatory demands, business leaders create an environment where AI delivers real value without compromising trust or accountability.

Now is the time to assess your organization’s AI maturity and governance posture. Start by defining your core AI values, evaluating existing automations, and building cross-functional teams to monitor and enhance responsible AI adoption. The foundations you set today will fuel your ITSM success — responsibly and sustainably — for years to come.

Ready to strengthen your AI governance in ITSM? Reach out to our experts to discuss custom frameworks and policies that fit your enterprise needs.